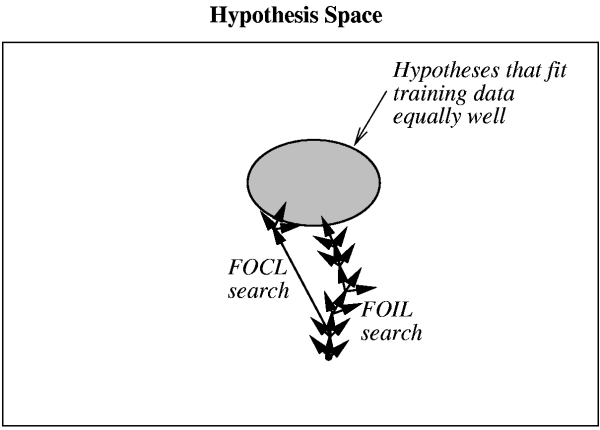

| | Inductive Learning | Analytical Learning |
|---|---|---|
| Goal | Hypothesis fits data | Hypothesis fits domain theory |
| Justification | Statistical inference | Deductive inference |
| Advantages | Requires little prior knowledge | Learns from scarce data |
| Pitfalls | Scarce data, incorrect bias | Imperfect domain theory |

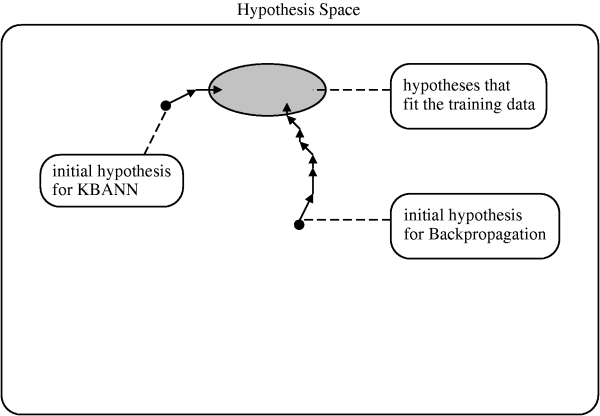

temperature, not insurance),

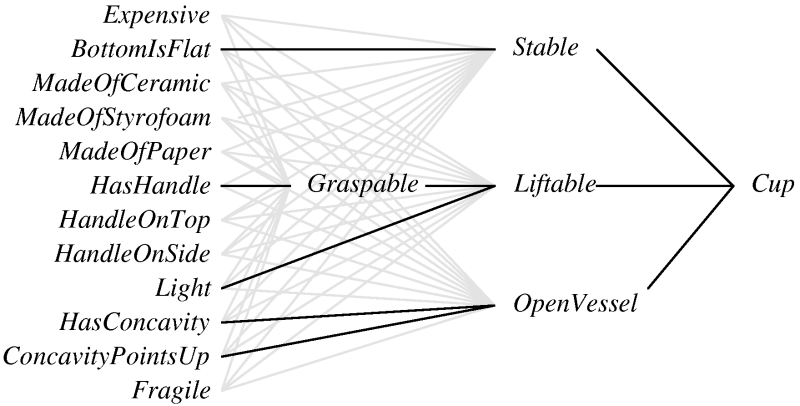

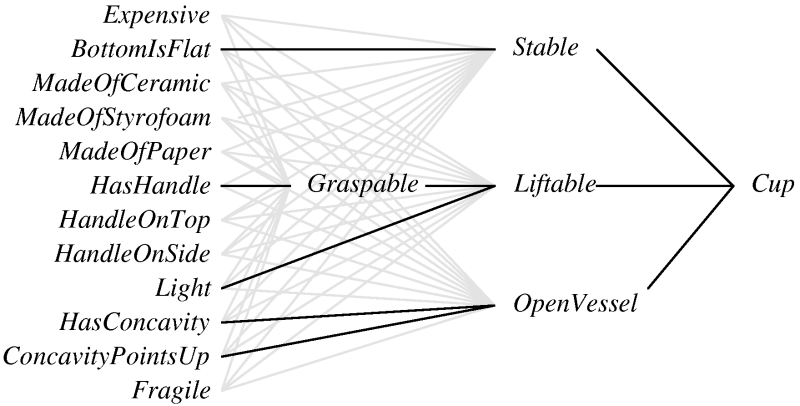

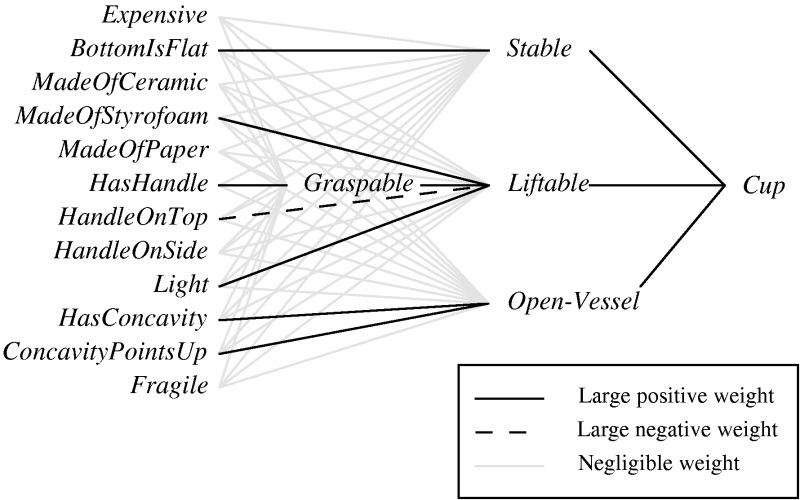

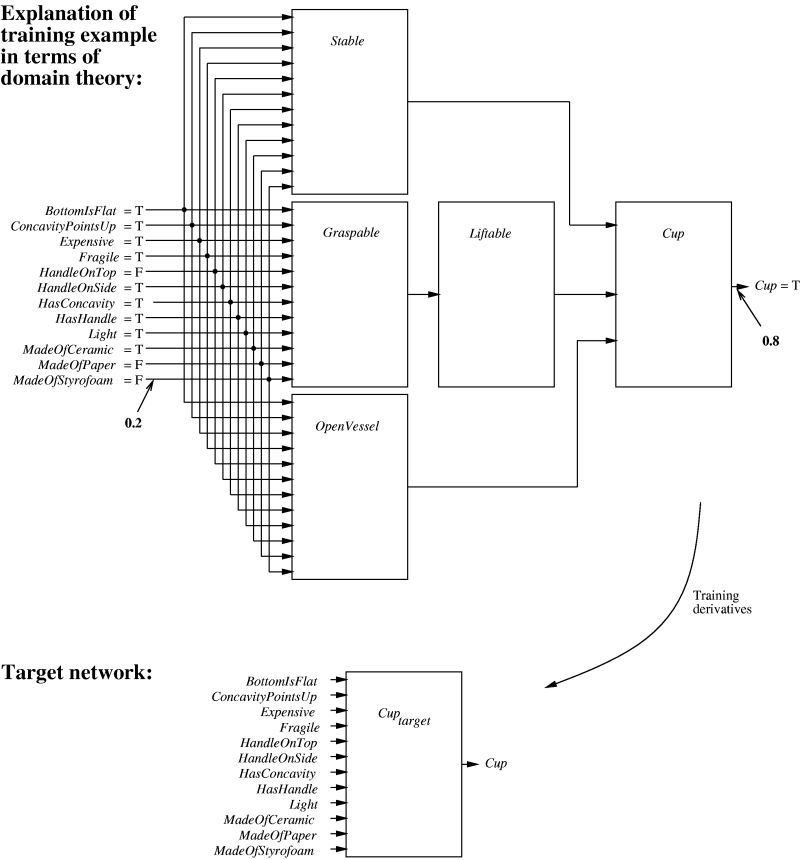

then refine using data.

Cup ← Stable, Liftable, OpenVessel

Stable ← BottomIsFlat

Liftable ← Graspable, Light

Graspable ← HasHandle

OpenVessel ← HasConcavity, ConcavityPointsUp
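The clauses above can be read as a small propositional Horn-clause theory. A minimal sketch of how such a theory might be checked against one example follows, assuming each head has exactly one clause and the theory is acyclic. The function name `proves` and the two sample objects (`mug`, `bowl`) are illustrative assumptions, not part of the original talk.

```python
# Domain theory from the slide: each head maps to its list of antecedents.
DOMAIN_THEORY = {
    "Cup": ["Stable", "Liftable", "OpenVessel"],
    "Stable": ["BottomIsFlat"],
    "Liftable": ["Graspable", "Light"],
    "Graspable": ["HasHandle"],
    "OpenVessel": ["HasConcavity", "ConcavityPointsUp"],
}


def proves(goal, facts, theory=DOMAIN_THEORY):
    """Return True if `goal` follows from `facts` by backward chaining.

    Assumes one clause per head and no cycles, as in the theory above.
    """
    if goal in facts:
        return True          # goal is an observed attribute
    body = theory.get(goal)
    if body is None:
        return False         # no clause concludes this goal
    # The goal holds only if every antecedent can be proved.
    return all(proves(sub, facts, theory) for sub in body)


# Hypothetical examples, described by the attributes they have.
mug = {"BottomIsFlat", "HasHandle", "Light",
       "HasConcavity", "ConcavityPointsUp", "MadeOfCeramic"}
bowl = {"BottomIsFlat", "Light", "HasConcavity", "ConcavityPointsUp"}

print(proves("Cup", mug))   # True: every antecedent chain is satisfied
print(proves("Cup", bowl))  # False: no HasHandle, so Graspable fails
```

Attributes such as `MadeOfCeramic` never appear in a proof, which is how the domain theory separates relevant features from irrelevant ones. If the theory is imperfect (for instance, a cup without a handle), the proof fails even for a true cup, which is the pitfall that refinement using data is meant to address.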

| Cup | Non-Cups | |||||||||

| BottomIsFlat | X | X | X | X | X | X | X | X | ||

| ConcavityPointsUp | X | X | X | X | X | X | X | |||

| Expensive | X | X | X | X | ||||||

| Fragile | X | X | X | X | X | X | ||||

| HandleOnTop | X | X | ||||||||

| HandleOnSide | X | X | X | |||||||

| HasConcavity | X | X | X | X | X | X | X | X | X | |

| HasHandle | X | X | X | X | X | |||||

| Light | X | X | X | X | X | X | X | X | ||

| MadeOfCeramic | X | X | X | X | ||||||

| MadeOfPaper | X | X | ||||||||

| MadeOfStyrofoam | X | X | X | X | ||||||

This talk available at http://jmvidal.cse.sc.edu/talks/indanalytical/

Copyright © 2009 José M. Vidal. All rights reserved.

17 April 2003, 12:26PM