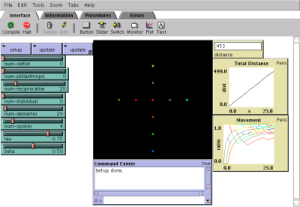

Figure 1: Click on the image in order to see the applet and get the source code

For this problem set I have implement the problem and solution from

The basic problem is that we have a number of agents (known as "deliverators"), each one is assigned a number of deliveries. All deliverators start at the "depot", also known as the center of the screen. From this center emmanate a number of "spokes", each spoke has three possible delivery points along it. When the deliverators are in the depot they look at the next task that they have to deliver and try to get some other unsuspecting deliverator to do it for them.

In the paper Sandip studies a technique he calls "reciprocity" which is like tit-for-tat but instead of making a definitive desition based on the last move, the agent makes a stochastic decision based on the past history. That is, if you've been nice to me in the past that increases the probability that I will be nice to you in the future. Sandip shows how reciprocity causes the overall distance travelled by all the agents to decrease while, he claims, the agents continue to act selfishly.

The problem is that agents that implement this reciprocity function are not really being rational. Why should I flip a coin to decide wether to be nice to you when I know that you have not been nice to me most of the time?

For this problem set you will try to develop an incentive-compatible protocol for the deliverators to exchange messages. The protocol should include some kind of simple learning on the part of the deliverators. That is, start with the assumption that each deliverator is a rational utility-maximizing agent, but who is willing to take some risks if those risks might lead to a reward later on, and develop a set of rules that these agents can follow so that not only will they be happily greedy, but also the social welfare will be maximized. The key points to remember are:

This problem set is more open-ended than the previous one. As such, it will require you to justify your choices with more detailed prose. You need to tell a coherent story, and I mean "story" literally as in "There were all these pizza deliverators who got paid by the hour but had to pay for their own gas...". Make sure the deliverators in your story are behaving rationaly.

Sandip continued working with this model in

This problem set is to be done individually. You cannot ask or receive help from anyone. As will all the problem sets, you will hand them in using our department's dropbox. For the netlogo problem sets you will hand in only one file, your .nlogo file. You will use the information tab of the program for writing your detailed explanation of the techniques you used, how the program works, how to set the controls to obtain the different behaviors you want to show, etc. The information tab needs to start with:

Name: