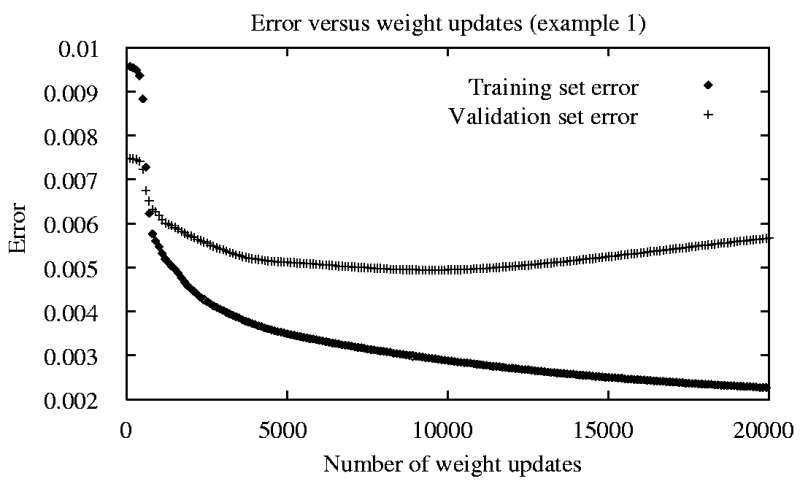

- The error measured on the training set decreases monotonically as training proceeds.

- But when measured against the validation set, the error is non-monotonic: it typically falls at first and then rises once the network starts to overfit the training data. This curve measures the generalization accuracy of the network.

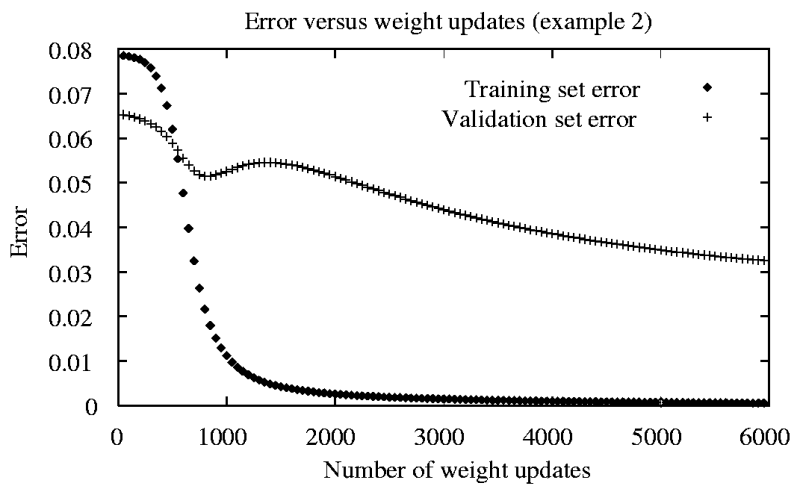

- The second figure shows that it can be hard to pick the minimum: the validation error curve can have local minima, so the first minimum reached is not necessarily the lowest.

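- Some techniques used to fight overfitting include:

  - Weight decay: decrease each weight by some small factor during each iteration. Keeping the weights small biases learning against complex decision surfaces.

  - Early stopping with a validation set: use a held-out validation set to monitor the error during training and keep the weights that give the lowest validation error. Be careful not to stop at a minimum that comes too early, since the validation error may rise for a while and then fall again.

  - With small data sets, use k-fold cross-validation: divide the available data into k disjoint subsets. In each of k runs, one subset is the validation set and the other k-1 subsets are the training data; the results of the k runs are then averaged.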
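A minimal sketch of a weight-decay update, assuming plain gradient descent on a flat list of weights. The function name `weight_decay_step` and the parameters `lr` and `decay` are hypothetical. Each weight is shrunk by the factor `(1 - decay)` and then moved against its gradient, which is equivalent to adding a penalty proportional to the sum of squared weights to the error being minimized.

```python
def weight_decay_step(weights, grads, lr=0.1, decay=0.01):
    """One gradient-descent step with weight decay (hypothetical helper).

    Each weight is shrunk by the factor (1 - decay), then moved against
    its error gradient:  w <- (1 - decay) * w - lr * g
    """
    if not 0.0 <= decay < 1.0:
        raise ValueError("decay must be in [0, 1)")
    return [(1.0 - decay) * w - lr * g for w, g in zip(weights, grads)]


if __name__ == "__main__":
    w = [1.0, -2.0, 0.5]
    # With a zero gradient, decay alone pulls every weight geometrically toward 0.
    for _ in range(10):
        w = weight_decay_step(w, [0.0, 0.0, 0.0], decay=0.1)
    print(w)  # each original weight scaled by 0.9 ** 10
```

In a real network the gradient term pulls weights toward values that fit the data while the decay term pulls them back toward zero, so only weights that consistently reduce the error stay large.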
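A minimal sketch of early stopping on a validation set, assuming the caller supplies validation errors one epoch at a time. The function `early_stopping_epoch` and its `patience` parameter are hypothetical names, not taken from the notes or any library. Rather than stopping at the first rise in validation error, it waits `patience` epochs without improvement, which guards against stopping at an early local minimum.

```python
def early_stopping_epoch(val_errors, patience=3):
    """Return (best_epoch, best_error) under a patience-based stopping rule.

    `val_errors` may be any iterable; if it is a generator that trains one
    epoch per item, breaking out of the loop also stops training.
    """
    best_epoch, best_err = -1, float("inf")
    for epoch, err in enumerate(val_errors):
        if err < best_err:
            # New lowest validation error: remember these weights/epoch.
            best_epoch, best_err = epoch, err
        elif epoch - best_epoch >= patience:
            # No improvement for `patience` epochs in a row: stop.
            break
    return best_epoch, best_err


if __name__ == "__main__":
    # Made-up validation curve with a local minimum at epoch 2.
    errors = [0.9, 0.7, 0.6, 0.65, 0.62, 0.55, 0.58, 0.6, 0.61, 0.63, 0.7]
    print(early_stopping_epoch(errors, patience=1))  # stops at the local minimum
    print(early_stopping_epoch(errors, patience=4))  # waits and finds the lower one
```

With `patience=1` the rule returns epoch 2, the premature local minimum; with `patience=4` it keeps going and returns epoch 5, where the validation error is lower.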
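A minimal sketch of k-fold cross-validation over example indices, assuming the data can be addressed by index `0..n-1`. The helpers `k_fold_splits` and `cross_validated_mean` and the `evaluate` callback are hypothetical; `evaluate` stands in for "train on these indices, return a score on those", for example the best number of training iterations found on the validation fold.

```python
import random


def k_fold_splits(n, k, shuffle_seed=None):
    """Yield (train_indices, val_indices) for each of k disjoint folds."""
    if not 2 <= k <= n:
        raise ValueError("need 2 <= k <= n")
    indices = list(range(n))
    if shuffle_seed is not None:
        random.Random(shuffle_seed).shuffle(indices)
    # Spread the remainder so fold sizes differ by at most one.
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = indices[start:start + size]
        train = indices[:start] + indices[start + size:]  # the other k-1 folds
        yield train, val
        start += size


def cross_validated_mean(n, k, evaluate, shuffle_seed=None):
    """Average evaluate(train, val) over the k folds."""
    scores = [evaluate(tr, va) for tr, va in k_fold_splits(n, k, shuffle_seed)]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    for train, val in k_fold_splits(10, 3):
        print("val:", val, "train size:", len(train))
```

Each example lands in exactly one validation fold, so every example is used for validation once and for training k-1 times, which is what makes the method attractive when data is scarce.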